Chutian Jiang

PhD Candidate in Computational Media and Arts (CMA)

The Hong Kong University of Science and Technology (Guangzhou)

Biography

Chutian Jiang (江楚天) is a PhD Candidate in Computational Media and Arts (CMA) at the APEX Lab at The Hong Kong University of Science and Technology (Guangzhou). As a Human-Computer Interaction (HCI) researcher, his research interests include haptic technologies, AI-assisted multimodal education, and accessibility design.

Download my resumé.

- Human-Computer Interaction (HCI)

- Haptic Technologies

- AI-assisted Multimodal Education

- Accessibility Design

- PhD Candidate in Computational Media and Arts

  The Hong Kong University of Science and Technology (Guangzhou)

- MSc in Industrial Chemistry, 2021

  National University of Singapore

- BEng in Special Energy and Pyrotechniques, 2019

  Nanjing University of Science and Technology

Experience

Responsibilities include:

- Analysing

- Experiments

- Researching

Featured Publications

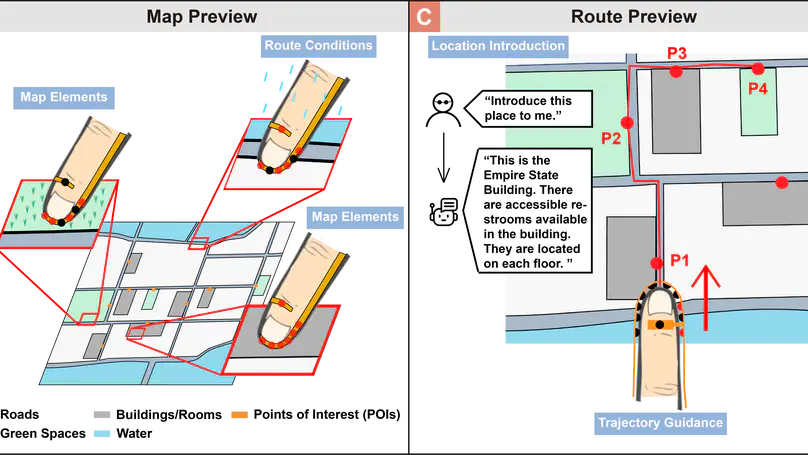

Previewing routes to unfamiliar destinations is a crucial task for many blind and low vision (BLV) individuals to ensure safety and confidence before their journey. While prior work has primarily supported navigation during travel, less research has focused on how best to assist BLV people in previewing routes on a map. We designed a novel electrotactile system around the fingertip and the Trip Preview Assistant (TPA) to convey map elements, route conditions, and trajectories. TPA harnesses large language models (LLMs) to dynamically control and personalize electrotactile feedback, enhancing the interpretability of complex spatial map data for BLV users. In a user study with twelve BLV participants, our system demonstrated improvements in efficiency and user experience for previewing maps and routes. This work contributes to advancing the accessibility of visual map information for BLV users when previewing trips.

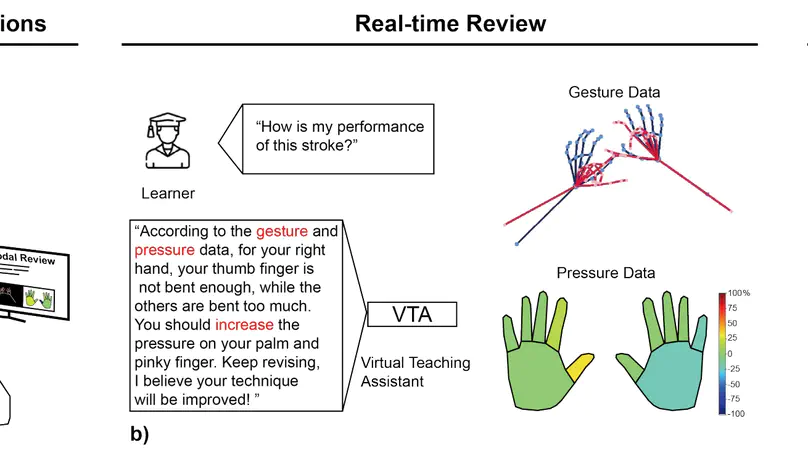

Although remote learning is widely used for delivering and capturing knowledge, it has limitations in teaching hands-on skills that require nuanced instructions and demonstrations of precise actions, such as massage. Furthermore, scheduling conflicts between instructors and learners often limit the availability of real-time feedback, reducing learning efficiency. To address these challenges, we developed a synthesis tool utilizing an LLM-powered Virtual Teaching Assistant (VTA). This tool integrates multimodal instructions that convey precise data, such as stroke patterns and pressure control, while providing real-time feedback for learners and summarizing their performance for instructors. Our case study with instructors and learners demonstrated the effectiveness of these multimodal instructions and the VTA in enhancing massage teaching and learning. We then discuss the tool’s use in other hands-on skills instruction and cognitive process differences in various courses.

People who are blind and low vision (BLV) encounter numerous challenges in their daily lives and work. To support them, various haptic assistive tools have been developed. Despite these advancements, the effective utilization of these tools—including the optimal haptic feedback and on-body stimulation positions for different tasks along with their limitations—remains poorly understood. Recognizing these gaps, we conducted a systematic literature review spanning two decades (2004–2024) to evaluate the development of haptic assistive tools within the HCI community. Our findings reveal that these tools are primarily used for understanding graphical information, providing guidance and navigation, and facilitating education and training, among other life and work tasks. We identified three main limitations: hardware limitations, functionality limitations, and UX and evaluation methods limitations. Based on these insights, we discuss potential research avenues and offer suggestions for enhancing the effectiveness of future haptic assistive technologies.

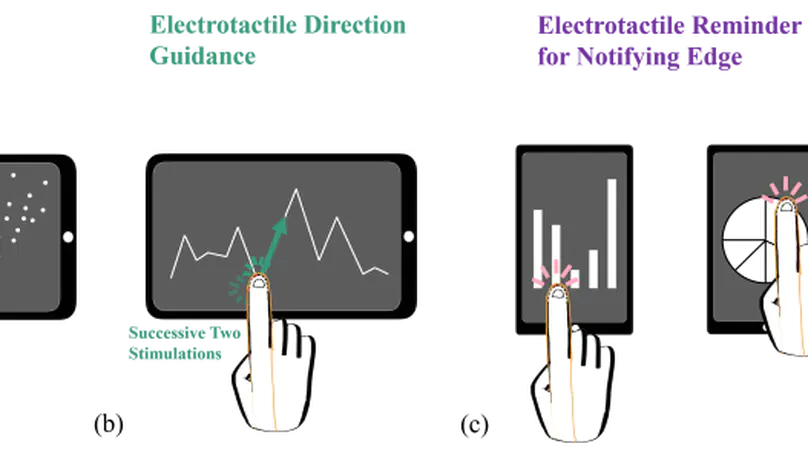

Charts are crucial in conveying information across various fields but are inaccessible to blind and low vision (BLV) people without assistive technology. Chart comprehension tools leveraging haptic feedback have been used widely but are often bulky, expensive, and static, rendering them inefficient for conveying chart data. To increase device portability, enable multitasking, and provide efficient assistance in chart comprehension, we introduce a novel system that delivers unobtrusive modulated electrotactile feedback directly to the fingertip edge. Our three-part study with twelve participants confirmed the effectiveness of this system, demonstrating that electrotactile feedback, when applied for 0.5 seconds with a 0.12-second interval, provides the most accurate position and direction recognition. Furthermore, our electrotactile device has proven valuable in assisting BLV participants in comprehending four commonly used charts: line charts, scatterplots, bar charts, and pie charts. We also delve into the implications of our findings on recognition enhancement, presentation modes, and function synergy.

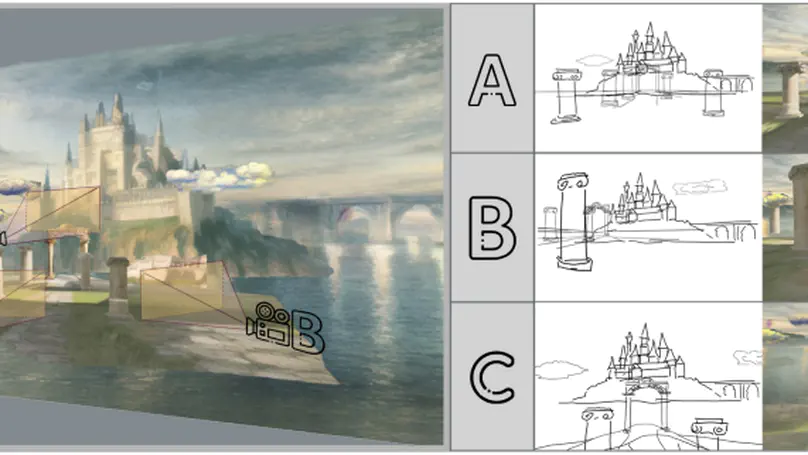

We propose Neural Canvas, a lightweight 3D platform that integrates sketching and a collection of generative AI models to facilitate scenic design prototyping. Compared with traditional 3D tools, sketching in a 3D environment helps designers quickly express spatial ideas, but it does not facilitate the rapid prototyping of scene appearance or atmosphere. Neural Canvas integrates generative AI models into a 3D sketching interface and incorporates four types of projection operations to facilitate 2D-to-3D content creation. Our user study shows that Neural Canvas is an effective creativity support tool, enabling users to rapidly explore visual ideas and iterate 3D scenic designs. It also expedites the creative process for both novices and artists who wish to leverage generative AI technology, resulting in attractive and detailed 3D designs created more efficiently than using traditional modeling tools or individual generative AI platforms.

Being able to analyze and derive insights from data, which we call Daily Data Analysis (DDA), is an increasingly important skill in everyday life. While the accessibility community has explored ways to make data more accessible to blind and low-vision (BLV) people, little is known about how BLV people perform DDA. Knowing BLV people’s strategies and challenges in DDA would allow the community to make DDA more accessible to them. Toward this goal, we conducted a mixed-methods study of interviews and think-aloud sessions with BLV people (N=16). Our study revealed five key approaches for DDA (i.e., overview obtaining, column comparison, key statistics identification, note-taking, and data validation) and the associated challenges. We discussed the implications of our findings and highlighted potential directions to make DDA more accessible for BLV people.

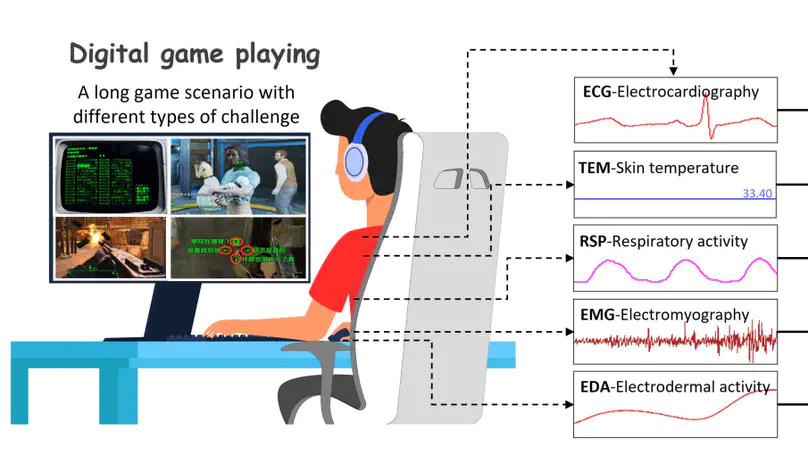

Challenge is the core element of digital games. The wide spectrum of physical, cognitive, and emotional challenge experiences provided by modern digital games can be evaluated subjectively using a questionnaire, the CORGIS, which allows for a post hoc evaluation of the overall experience that occurred during game play. Measuring this experience dynamically and objectively, however, would allow for a more holistic view of the moment-to-moment experiences of players. This study, therefore, explored the potential of detecting perceived challenge from physiological signals. For this, we collected physiological responses from 32 players who engaged in three typical game scenarios. Using perceived challenge ratings from players and extracted physiological features, we applied multiple machine learning methods and metrics to detect challenge experiences. Results show that most methods achieved a …

As touch interactions become ubiquitous in the field of human-computer interaction, it is critical to enrich haptic feedback to improve efficiency, accuracy, and immersive experiences. This paper presents HapTag, a thin and flexible actuator that supports the integration of push-button tactile renderings into everyday soft surfaces. Specifically, HapTag works on the principle of the hydraulically amplified electroactive actuator (HASEL), is optimized by embedding a pressure-sensing layer, and is activated with a dedicated voltage appliance in response to users’ input actions, resulting in a fast response time and controllable, expressive push-button tactile rendering capabilities. HapTag has a compact form factor and can be attached to, integrated with, or embedded in various soft surfaces such as cloth, leather, and rubber. Three common push-button tactile patterns were adopted and implemented with HapTag. We validated the feasibility and expressiveness of HapTag by demonstrating a series of innovative applications under different circumstances.

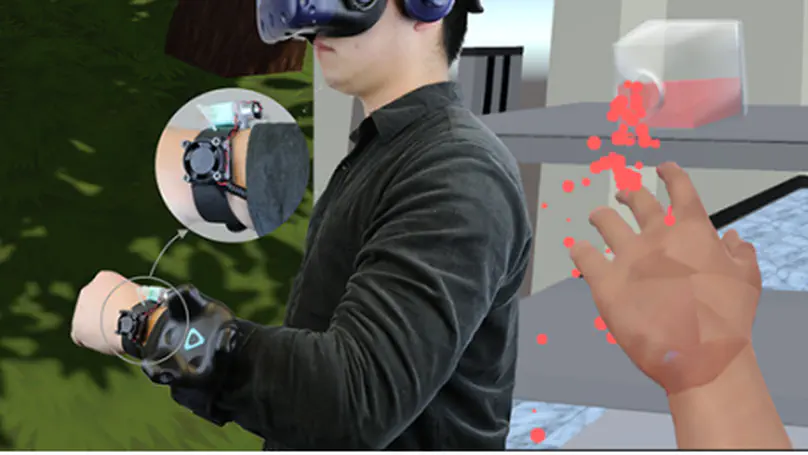

The imitation of pain sensation in Virtual Reality is considered valuable for safety education and training but has seldom been studied. This paper presents Douleur, a wearable haptic device that renders intensity-adjustable pain sensations with chemical stimulants. Unlike mechanical, thermal, or electric stimulation, chemically induced pain is closer to a burning sensation and is long-lasting. Douleur consists of a microfluidic platform that precisely emits capsaicin onto the skin and a microneedling component that helps the stimulant penetrate the epidermis layer to activate the trigeminal nerve efficiently. Moreover, it embeds a Peltier module that applies a heating or cooling stimulus to the affected area to adjust the level of pain on the skin. To better understand how people would react to the chemical stimulant, we conducted a first study to quantify the enhancement of the sensation by changing the capsaicin concentration, skin temperature, and time, and to determine suitable capsaicin concentration levels. In the second study, we demonstrated that Douleur could render a variety of pain sensations in corresponding virtual reality applications. In sum, Douleur is the first wearable prototype that leverages a combination of capsaicin and a Peltier module to induce rich pain sensations, and it opens up a wide range of applications for safety education and more.

Recent Publications

Contact

- cdswjct@gmail.com

- Haidian District, Beijing, 100000

- Monday - Friday 8:00 to 18:00
